Citizen science for plant identification: insights from Pl@ntnet

Joseph Salmon

IMAG, Univ Montpellier, CNRS, Montpellier

Institut Universitaire de France (IUF)

Mainly joint work with:

- Tanguy Lefort (Univ. Montpellier, IMAG)

- Benjamin Charlier (Univ. Montpellier, IMAG)

- Camille Garcin (Univ. Montpellier, IMAG)

- Maximilien Servajean (Univ. Paul-Valéry-Montpellier, LIRMM, Univ. Montpellier)

- Alexis Joly (Inria, LIRMM, Univ. Montpellier)

and from

- Pierre Bonnet, Hervé Goëau (CIRAD, AMAP)

- Antoine Affouard, Jean-Christophe Lombardo, Titouan Lorieul, Mathias Chouet (Inria, LIRMM, Univ. Montpellier)

Pl@ntNet description

Pl@ntNet: ML for citizen science

A citizen science platform using machine learning to help people identify plants with their mobile phones

- Website: https://plantnet.org/

- Note: no mushroom identification!

https://identify.plantnet.org/stats

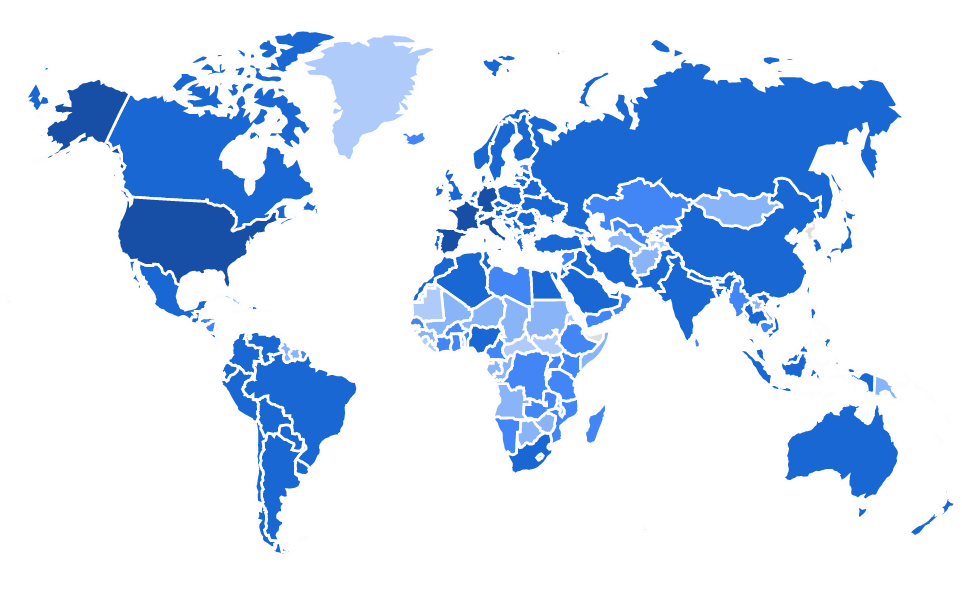

- Started in 2011, now 25M+ users

- 200+ countries

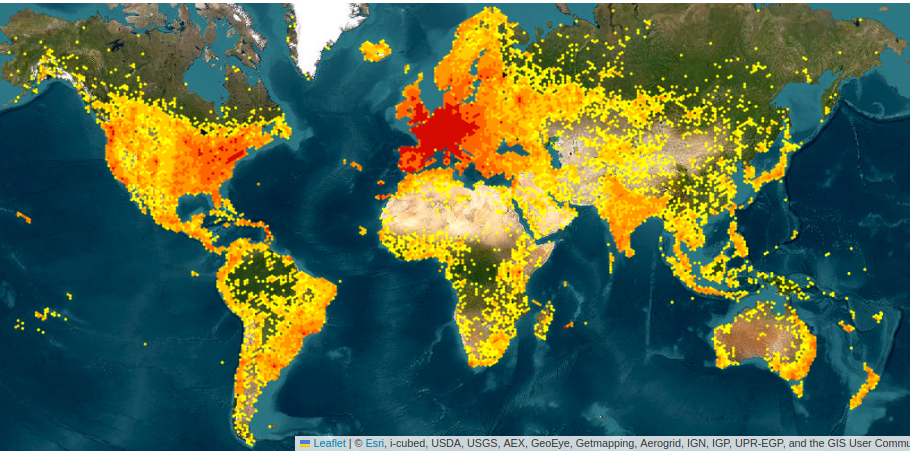

- Up to 2M images uploaded per day

- 50K species

- 1B+ total images

- 10M+ labeled / validated

Pl@ntNet & Cooperative Learning

Note: I am mostly innocent; started working with the Pl@ntNet team in 2020

Motivation: an excellent app … but not a perfect one. How can it be improved?

- Community effort: machine learning, ecology, engineering, amateurs

- Many open problems (theoretical/practical)

- Need for methodological/computational breakthrough

Contributions

- Pl@ntNet-300K (Garcin et al. 2021): Creation and release of a large-scale dataset sharing the same properties as Pl@ntNet; available for the community to improve learning systems

- Learning & crowd-sourced data (Lefort et al. 2024) and (Lefort et al. 2025): How to leverage multiple labels per image to improve the model? Need to assess quality: of the workers, the images/labels, the model, etc.

- Top-K learning (Garcin et al. 2022): Driven by theory, introduce a new loss to cope with Pl@ntNet constraints when outputting multiple labels (e.g., UX, Deep Learning framework, etc.)

Dataset release: Pl@ntNet-300K

Limitations of popular datasets:

- structure of labels too simplistic (CIFAR-10, CIFAR-100)

- classes might be too easy to discriminate

- might be too well-balanced (same number of images per class)

- contain duplicate, low-quality, or irrelevant images

Motivation:

release a large-scale dataset sharing similar features with the Pl@ntNet dataset to foster research in plant identification

\(\implies\) Pl@ntNet-300K (Garcin et al. 2021)

Intra-class variability

Inter-class ambiguity

Sampling bias

Top-5 most observed plant species in Pl@ntNet (13/04/2024):

25134 obs.

24720 obs.

24103 obs.

23288 obs.

23075 obs.

- Centaurea jacea: 10753 obs.

- Cenchrus agrimonioides: 6 obs.

- Magnolia grandiflora: 8376 obs.

- Moehringia trinervia: 413 obs.

Many more biases …

- Selection bias

- Convenience sampling: easy-to-access vs. hard-to-access locations

- Preference for certain species: visibility / ease of identification

- Subjective bias: selection based on personal judgment, may not be random or representative

- Rare species: rare or endangered species may be under-represented

- Temporal bias / seasonal variation: seasonal changes in plant characteristics

- …

Construction of Pl@ntNet-300K

- Earth: 300K+ species

- Pl@ntNet: 50K+ species

- Pl@ntNet-300K: 1K+ species

Note: long tail preserved by genera subsampling
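To illustrate why sampling at the genus level keeps the long tail, here is a minimal sketch that draws whole genera at random and keeps every species they contain, rare or common. The function name `subsample_by_genus`, the `fraction` parameter, and the toy species list are assumptions made for illustration; this is not the actual Pl@ntNet-300K construction script.

```python
import random


def subsample_by_genus(species_to_genus, fraction, seed=0):
    """Keep all species belonging to a random subset of genera.

    species_to_genus: dict mapping species name -> genus name.
    fraction: share of genera to keep (hypothetical parameter).
    """
    genera = sorted(set(species_to_genus.values()))
    rng = random.Random(seed)
    n_keep = max(1, round(fraction * len(genera)))
    kept_genera = set(rng.sample(genera, n_keep))
    # Every species of a kept genus is retained, whatever its frequency,
    # so rare species are not filtered out by the subsampling itself.
    kept_species = {s for s, g in species_to_genus.items() if g in kept_genera}
    return kept_species, kept_genera


# Toy example: the genus is taken as the first word of the species name.
toy_species = [
    "Centaurea jacea", "Centaurea nigra", "Cenchrus agrimonioides",
    "Magnolia grandiflora", "Magnolia stellata", "Moehringia trinervia",
]
toy_map = {name: name.split()[0] for name in toy_species}
kept_species, kept_genera = subsample_by_genus(toy_map, fraction=0.5)
```

Sampling species directly by frequency would tend to drop the rare ones; sampling genera leaves the within-genus imbalance untouched.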

Characteristics:

- 306,146 color images

- Size: 32 GB

- Labels: K=1,081 species

- Required 2,079,003 volunteer “workers”

Zenodo, 1 click download

https://zenodo.org/record/5645731

Code to train models

https://github.com/plantnet/PlantNet-300K

Votes, labels & aggregation

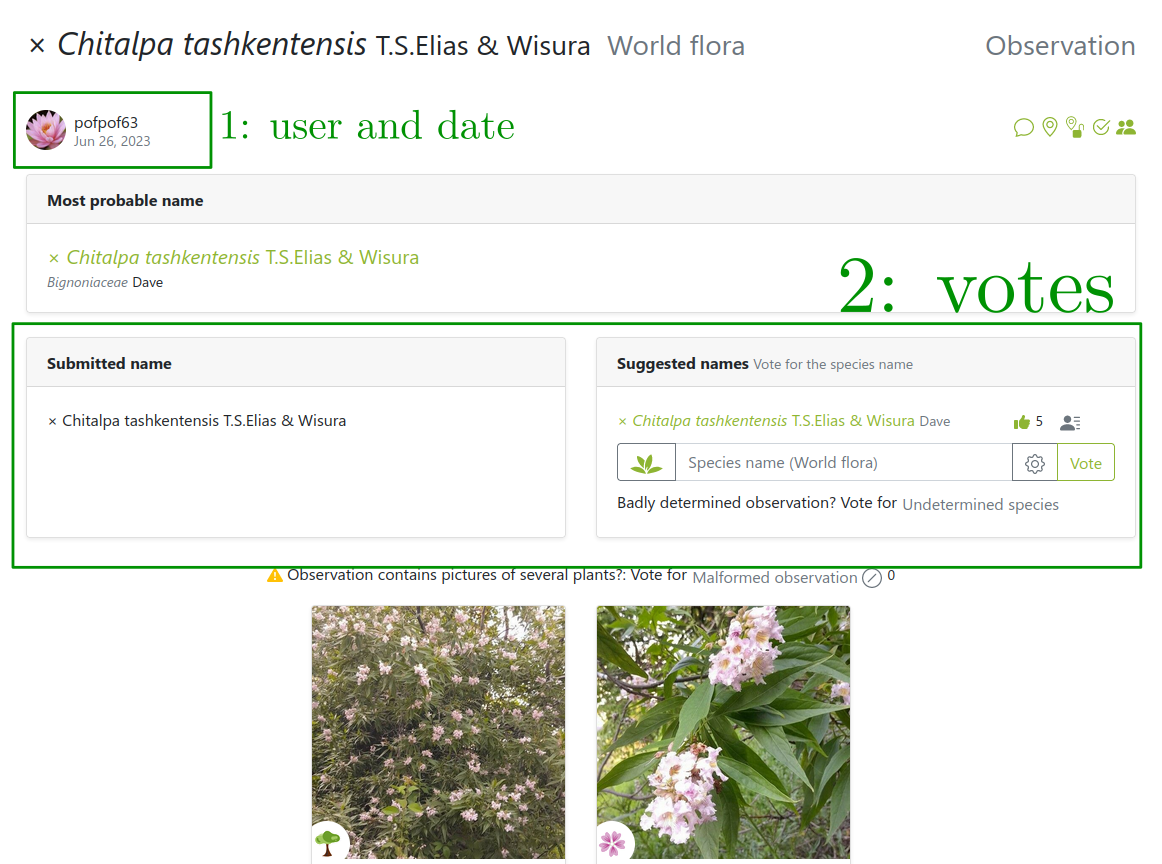

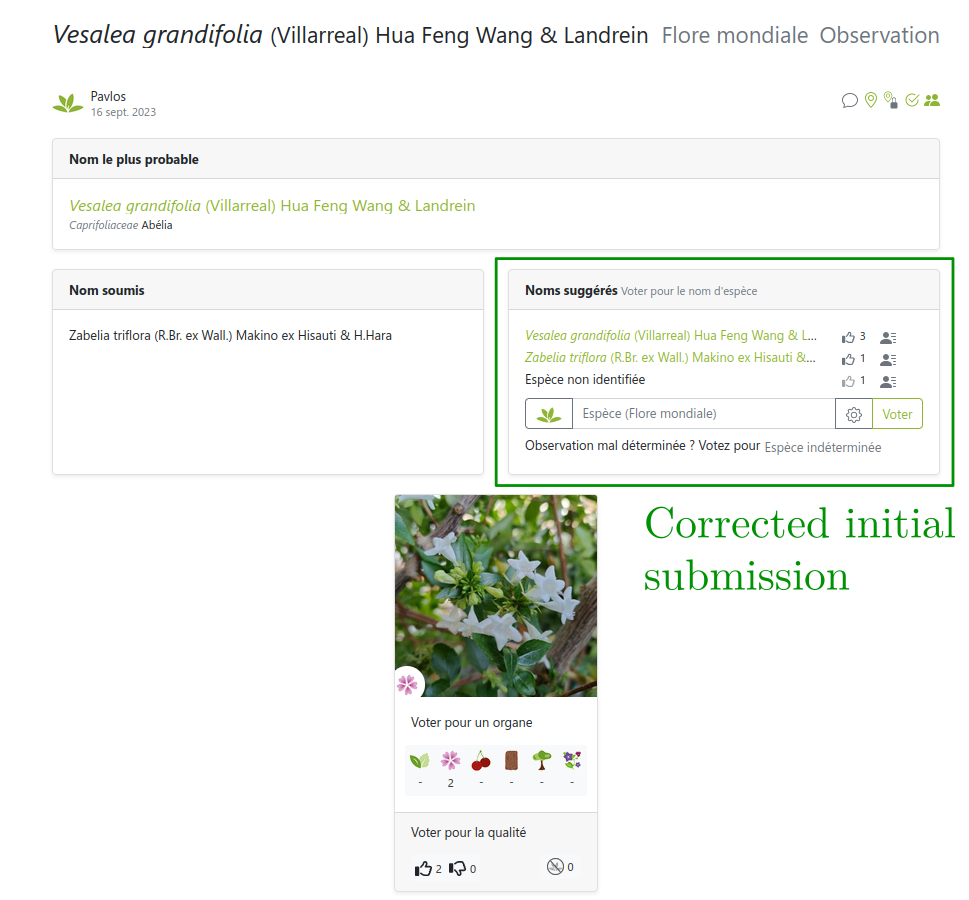

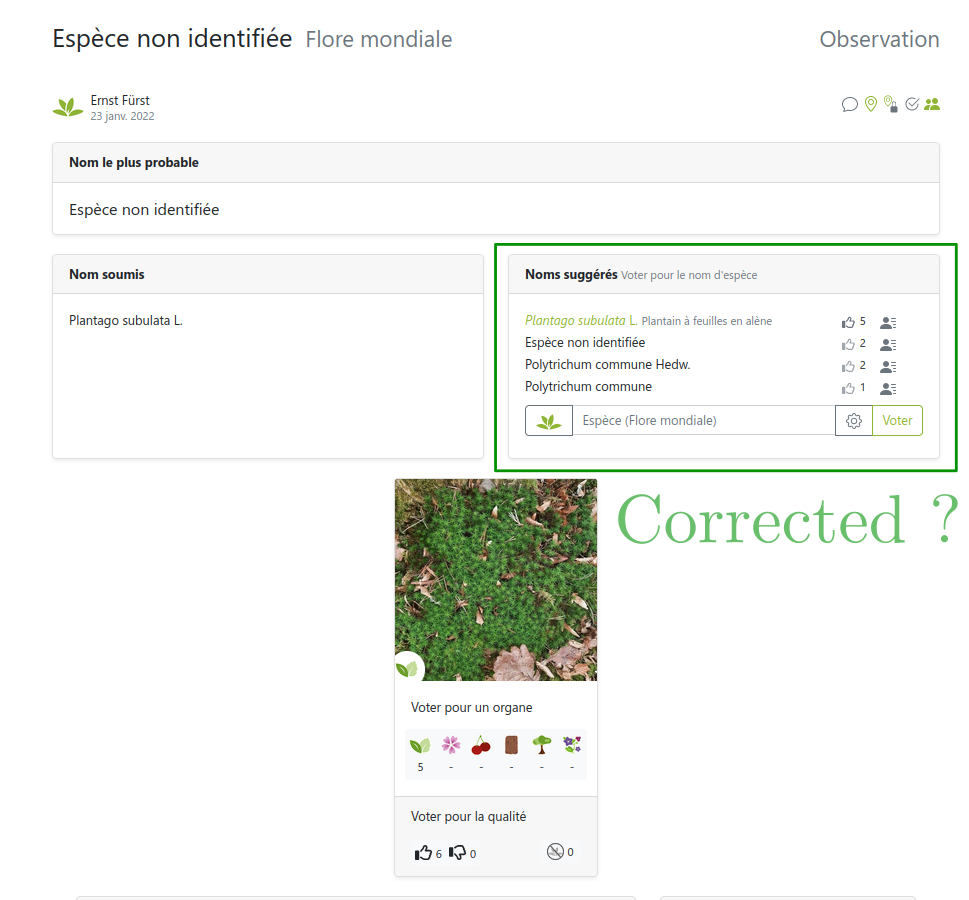

Images from users… so are the labels!

But users can be wrong or not experts

Several labels can be available per image!

But sometimes users can’t be trusted

Link: https://identify.plantnet.org/weurope/observations/1012500059

The good, the bad and the ugly

- The good: fast, easy, cheap data collection

- The bad: noisy labels with different levels of expertise

- The ugly: (partly) missing theory, ad-hoc methods for noisy labels

(Weighted) Majority Vote

Objective

Provide for all images \(x_i\) an aggregated label \(\hat{y}_i\) based on the votes \(y^{u}_i\) of the workers \(u \in \mathcal{U}\).

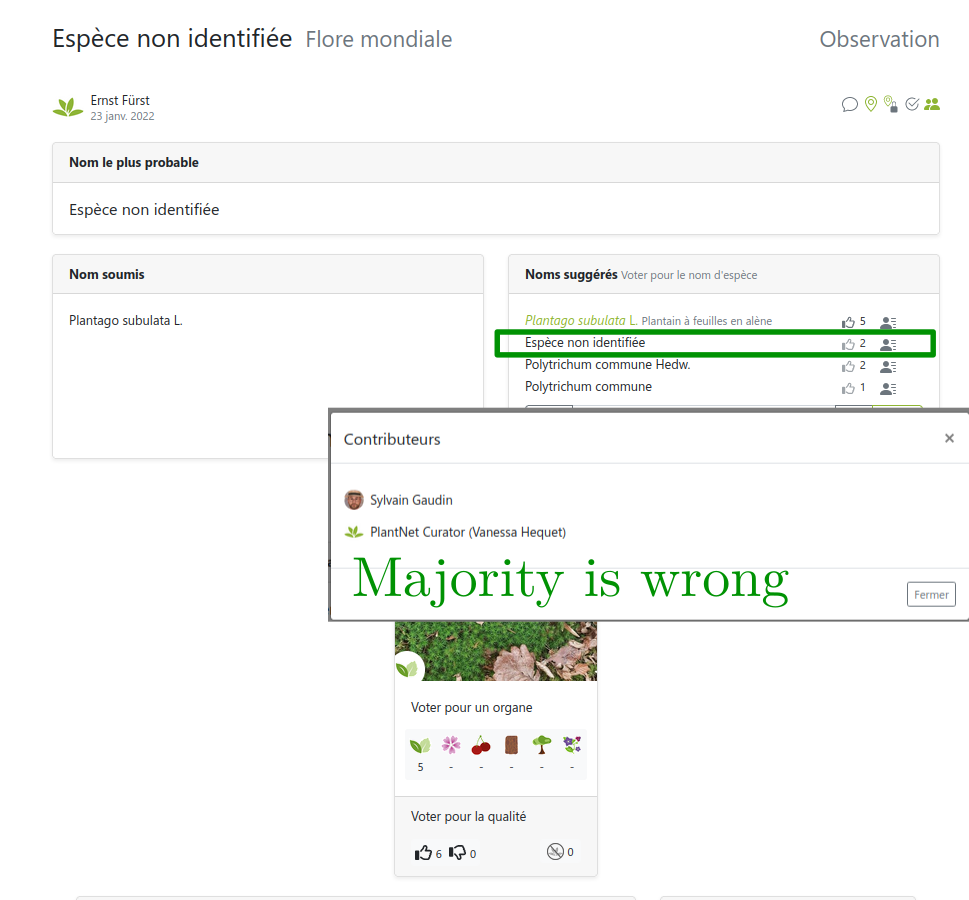

Majority Vote (MV): intuitively

Naive idea: make users vote and take the most voted label for each image

Majority Vote (MV): formally

\[ \forall x_i \in \mathcal{X}_{\text{train}},\quad \hat y_i^{\text{MV}} = \mathop{\mathrm{arg\,max}}_{k\in [K]} \Big(\sum\limits_{u\in\mathcal{U}(x_i)} {1\hspace{-3.8pt} 1}_{\{y^{u}_i=k\}} \Big) \]

Properties:

✓ simple

✓ adapted for any number of users

✓ efficient: a few labelers suffice (say < 5, Snow et al. 2008)

✗ ineffective for borderline cases

✗ suffers from spammers / adversarial users
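The majority vote formula can be sketched in a few lines of Python. The data layout (a dictionary of votes per image) and the name `majority_vote` are assumptions chosen for readability, not the format used by Pl@ntNet.

```python
from collections import Counter


def majority_vote(votes):
    """Return the most voted label for each image.

    votes: dict mapping image id -> dict mapping user id -> label.
    Ties go to the label encountered first, which is an arbitrary choice.
    """
    aggregated = {}
    for image_id, user_votes in votes.items():
        counts = Counter(user_votes.values())
        aggregated[image_id] = counts.most_common(1)[0][0]
    return aggregated


votes = {
    "img_1": {"u1": "Rosa indica", "u2": "Rosa indica", "u3": "Mentha arvensis"},
    "img_2": {"u1": "Ficus elastica"},
}
mv_labels = majority_vote(votes)
```

Note that `img_2` receives a label from a single vote: plain majority voting has no notion of how trustworthy that lone voter is.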

Constraints: wide range of skills, different levels of expertise

Modeling aspect: add a user weight to balance votes

Assume given weights \((w_u)_{u\in\mathcal{U}}\) for now

Weighted Majority Vote (WMV): example

The label confidence \(\mathrm{conf}_{i}(k)\) of label \(k\) for image \(x_i\) is the sum of the weights of the workers who voted for \(k\): \[ \forall k \in [K], \quad \mathrm{conf}_{i}(k) = \sum\limits_{u\in\mathcal{U}(x_i)} w_u {1\hspace{-3.8pt} 1}_{\{y^{u}_i=k\}} \]

Size effect:

- more votes \(\Rightarrow\) more confidence

- more expertise \(\Rightarrow\) more confidence

The label accuracy \(\mathrm{acc}_{i}(k)\) of label \(k\) for image \(x_i\) is the normalized sum of weights of the workers who voted for \(k\): \[ \forall k \in [K], \quad \mathrm{acc}_{i}(k) = \frac{\mathrm{conf}_i(k)}{\sum\limits_{k'\in [K]} \mathrm{conf}_i(k')} \]

Interpretation:

- only the proportion of the weights matters
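As a small illustration of the two quantities, the sketch below computes \(\mathrm{conf}_i\) and \(\mathrm{acc}_i\) for one image and checks that doubling every weight changes the confidence but not the accuracy. The helper names and the toy weights are assumptions for the example.

```python
from collections import defaultdict


def label_confidence(user_votes, weights):
    """conf_i(k): sum of the weights of the users who voted for label k."""
    conf = defaultdict(float)
    for user, label in user_votes.items():
        conf[label] += weights[user]
    return dict(conf)


def label_accuracy(conf):
    """acc_i(k): confidence of label k divided by the total confidence."""
    total = sum(conf.values())
    return {label: value / total for label, value in conf.items()}


user_votes = {"u1": "Rosa indica", "u2": "Rosa indica", "u3": "Mentha arvensis"}
weights = {"u1": 1.0, "u2": 0.5, "u3": 0.5}  # toy weights

conf = label_confidence(user_votes, weights)
acc = label_accuracy(conf)

# Doubling all weights doubles the confidence but leaves the accuracy unchanged.
doubled = {user: 2 * w for user, w in weights.items()}
conf_doubled = label_confidence(user_votes, doubled)
acc_doubled = label_accuracy(conf_doubled)
```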

Weighted Majority Vote (WMV)

Majority vote in which each user \(u\) carries a weight \(w_u\): \[ \forall x_i \in \mathcal{X}_{\text{train}},\quad \hat{y}_i^{\textrm{WMV}} = \mathop{\mathrm{arg\,max}}_{k\in [K]} \Big(\sum\limits_{u\in\mathcal{U}(x_i)} w_u {1\hspace{-3.8pt} 1}_{\{y^{u}_i=k\}} \Big) \]

Note: the weighted majority vote can be computed from confidence or accuracy \[ \hat{y}_i^{\textrm{WMV}} = \mathop{\mathrm{arg\,max}}_{k\in [K]} \Big( \mathrm{conf}_i(k) \Big) = \mathop{\mathrm{arg\,max}}_{k\in [K]} \Big(\mathrm{acc}_i(k) \Big) \]
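A vectorized sketch of the weighted majority vote with NumPy is given below. It assumes votes are stored as an integer array with `-1` marking "did not vote"; this encoding and the function name are choices made for the example, not a description of the Pl@ntNet pipeline.

```python
import numpy as np


def weighted_majority_vote(votes, weights, n_classes):
    """Weighted majority vote for all images at once.

    votes: int array of shape (n_images, n_users), -1 where a user did not vote.
    weights: float array of shape (n_users,).
    Returns the aggregated labels, the confidences and the accuracies.
    """
    n_images = votes.shape[0]
    conf = np.zeros((n_images, n_classes))
    for k in range(n_classes):
        # Sum the weights of the users who voted for class k.
        conf[:, k] = ((votes == k) * weights).sum(axis=1)
    total = conf.sum(axis=1, keepdims=True)
    # Images without any vote keep an accuracy of zero for every class.
    acc = np.divide(conf, total, out=np.zeros_like(conf), where=total > 0)
    return conf.argmax(axis=1), conf, acc


votes = np.array([[0, 0, 1],
                  [2, -1, 1]])
weights = np.array([0.5, 0.5, 2.0])  # toy weights: the third user is an expert
labels, conf, acc = weighted_majority_vote(votes, weights, n_classes=3)
```

On the first image, two low-weight users vote for class 0 and the expert votes for class 1: the unweighted majority would pick class 0, while the weighted vote follows the expert. Since the accuracy is the confidence divided by a positive constant per image, both arg max coincide.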

Two pillars for validating a label \(\hat{y}_i\) for an image \(x_i\) in Pl@ntNet:

Expertise: labels quality check

keep images with label confidence above a threshold \(\theta_{\text{conf}}\), validate \(\hat{y}_i\) when \[ \boxed{\mathrm{conf}_{i}(\hat{y}_i) > \theta_{\text{conf}}} \]

Consensus: labels agreement check

keep images with label accuracy above a threshold \(\theta_{\text{acc}}\), validate \(\hat{y}_i\) when \[ \boxed{\mathrm{acc}_{i}(\hat{y}_i) > \theta_{\text{acc}}} \]
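The two checks can be combined in a short sketch. It assumes that a label is validated only when both conditions hold, and it uses hypothetical threshold values \(\theta_{\text{conf}}=2\) and \(\theta_{\text{acc}}=0.7\); the function name is also an assumption.

```python
def validate_label(conf, theta_conf=2.0, theta_acc=0.7):
    """Return (label, is_valid) for one image.

    conf: non-empty dict mapping label -> summed weights of its voters.
    A label is validated when it passes both the expertise check
    (confidence) and the consensus check (accuracy).
    """
    label = max(conf, key=conf.get)
    accuracy = conf[label] / sum(conf.values())
    is_valid = conf[label] > theta_conf and accuracy > theta_acc
    return label, is_valid


# Enough expertise and enough consensus: validated.
case_ok = validate_label({"Rosa indica": 2.5, "Mentha arvensis": 0.5})
# Enough expertise but too much disagreement: not validated.
case_disputed = validate_label({"Rosa indica": 2.5, "Ficus elastica": 1.5})
# Full agreement but too little expertise: not validated.
case_weak = validate_label({"Rosa indica": 1.5})
```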

Pl@ntNet label aggregation (EM algorithm)

Weighting scheme: weight each user's vote by the number of species that user has identified

Weights example

- \(n_{\mathrm{user}} = 6\)

- \(K=3\) : Rosa indica, Ficus elastica, Mentha arvensis

- \(\theta_{\text{conf}}=2\) and \(\theta_{\text{acc}}=0.7\)

Take into account 4 users out of 6

Invalidated label: Adding User 5 reduces accuracy

Label switched: User 6 is an expert (even self-validating)

Choice of weight function

\[ f(n_u) = n_u^\alpha - n_u^\beta + \gamma \text{ with } \begin{cases} \alpha = 0.5 \\ \beta=0.2 \\ \gamma=\log(2.1)\simeq 0.74 \end{cases} \]
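To get a feel for this weight function, the sketch below evaluates it with \(\alpha=0.5\), \(\beta=0.2\) and the numerical value \(\gamma \simeq 0.74\), and searches for the smallest number of identified species whose weight exceeds a target. The helper `min_species_for_weight` and the target \(\theta_{\text{conf}}=2\) are assumptions for illustration.

```python
ALPHA, BETA, GAMMA = 0.5, 0.2, 0.74  # gamma taken as its rounded value


def user_weight(n_species):
    """Weight of a user who has identified n_species species."""
    return n_species**ALPHA - n_species**BETA + GAMMA


def min_species_for_weight(target, max_species=10_000):
    """Smallest number of identified species whose weight exceeds target."""
    for n in range(max_species + 1):
        if user_weight(n) > target:
            return n
    return None


new_user_weight = user_weight(0)
# With a confidence threshold of 2, a single user above this count
# can validate a label alone (a "self-validating" user).
self_validating_from = min_species_for_weight(2.0)
```

Under these values a new user starts with a weight of about \(0.74\), so two new users together stay below a confidence threshold of 2, while a sufficiently experienced user can pass it alone.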

Other existing strategies

- Majority Vote (MV)

- Worker agreement with aggregate (WAWA): 2-step method

  - Majority vote

  - Weight users by how much they agree with the majority

  - Weighted majority vote

- TwoThird (iNaturalist)

  - Needs 2 votes

  - \(2/3\) of agreements
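The two alternative strategies can be sketched as follows. This is a simplified reading of the bullets above: the data layout, the function names, and the choice of a non-strict inequality for the \(2/3\) rule are assumptions, not the reference implementations.

```python
from collections import Counter, defaultdict


def majority_vote(votes):
    """votes: dict image id -> dict user id -> label."""
    return {
        image_id: Counter(user_votes.values()).most_common(1)[0][0]
        for image_id, user_votes in votes.items()
    }


def wawa(votes):
    """Majority vote, then weight users by agreement, then weighted vote."""
    mv = majority_vote(votes)
    agree, total = defaultdict(int), defaultdict(int)
    for image_id, user_votes in votes.items():
        for user, label in user_votes.items():
            total[user] += 1
            agree[user] += label == mv[image_id]
    weights = {user: agree[user] / total[user] for user in total}
    aggregated = {}
    for image_id, user_votes in votes.items():
        conf = defaultdict(float)
        for user, label in user_votes.items():
            conf[label] += weights[user]
        aggregated[image_id] = max(conf, key=conf.get)
    return aggregated, weights


def two_third(user_votes):
    """Return the top label if there are at least 2 votes and at least
    2/3 of them agree (assumed non-strict), otherwise None (rejected)."""
    n_votes = len(user_votes)
    if n_votes < 2:
        return None
    label, count = Counter(user_votes.values()).most_common(1)[0]
    # Integer comparison avoids floating-point issues around 2/3.
    return label if 3 * count >= 2 * n_votes else None


votes = {
    "img_1": {"u1": "A", "u2": "A", "u3": "B"},
    "img_2": {"u1": "B", "u2": "B", "u3": "A"},
    "img_3": {"u1": "C", "u2": "C", "u3": "A"},
    "img_4": {"u3": "B", "u1": "A"},  # tie for the plain majority vote
}
mv_labels = majority_vote(votes)
wawa_labels, wawa_weights = wawa(votes)
```

On `img_4` the plain majority vote is tied and falls back to the first label seen, whereas WAWA favors the user who agreed with the majority more often on the other images.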

Pl@ntNet labels release: South Western European flora

Extracting a subset of Pl@ntNet votes

- South Western European flora observations since \(2017\)

- \(\sim 800K\) users voted on more than \(11K\) species

- \(\sim 6.6M\) observations

- \(\sim 9M\) votes cast

- Imbalance: 80% of observations are represented by 10% of total votes

No ground truth available to evaluate the strategies

Test sets without ground truth

- Extract \(98\) experts: Tela Botanica + prior knowledge (P. Bonnet)

Pl@ntNet South Western European flora

Accuracy and number of classes kept

- Pl@ntNet aggregation performs better overall

- TwoThird is highly impacted by the reject threshold

- In ambiguous settings, strategies weighting users are better

Performance: Precision, recall and validity

- Pl@ntNet aggregation performs better overall

- TwoThird has good precision but bad recall

- We indeed remove some data but less than TwoThird

Why?

- More data

- Could correct non-expert users

- Could invalidate poor-quality observations

Main danger

- Model collapse (Shumailov et al. 2024): users are already guided by AI predictions

Potential strategies to integrate the AI vote

- AI as worker: naive integration

- AI fixed weight:

  - weight fixed to \(1.7\)

  - can invalidate two new users but is not self-validating

- AI invalidating:

  - weight fixed to \(1.7\)

  - can only invalidate observations

- AI confident:

  - weight fixed to \(1.7\)

  - participates only if its prediction confidence is high enough (above \(\theta_{\text{score}}\))

\(\Longrightarrow\) confident AI with \(\theta_{\text{score}}=0.7\) performs best… but invalidating AI could be preferred for safety \(\Longleftarrow\)
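One possible reading of the three fixed-weight strategies is sketched below. How exactly the AI vote enters the confidence and accuracy is an interpretation made for this example (in particular, the invalidating AI is modeled as only diluting the accuracy when it disagrees with the users); the function name, the strategy keys, and the thresholds \(\theta_{\text{conf}}=2\), \(\theta_{\text{acc}}=0.7\) are assumptions. The "AI as worker" variant is left out because its weight is not specified here.

```python
AI_WEIGHT = 1.7  # fixed AI weight quoted in the text


def aggregate_with_ai(user_conf, ai_label, ai_score, strategy,
                      theta_conf=2.0, theta_acc=0.7, theta_score=0.7):
    """Return (label, is_valid) after integrating the AI vote.

    user_conf: non-empty dict label -> summed user weights.
    strategy: "fixed", "invalidating" or "confident" (hypothetical keys).
    """
    conf = dict(user_conf)
    extra_total = 0.0
    if strategy == "fixed" or (strategy == "confident" and ai_score > theta_score):
        # The AI votes like a user with a fixed weight.
        conf[ai_label] = conf.get(ai_label, 0.0) + AI_WEIGHT
    elif strategy == "invalidating":
        # The AI never adds confidence; it only lowers the agreement
        # when it disagrees with the users' label.
        if ai_label != max(conf, key=conf.get):
            extra_total = AI_WEIGHT
    elif strategy != "confident":
        raise ValueError(f"unknown strategy: {strategy}")
    label = max(conf, key=conf.get)
    accuracy = conf[label] / (sum(conf.values()) + extra_total)
    return label, conf[label] > theta_conf and accuracy > theta_acc


two_new_users = {"A": 0.74 + 0.74}
# Fixed weight: the AI outvotes two new users but cannot validate alone.
fixed_case = aggregate_with_ai(two_new_users, "B", 0.9, "fixed")
# Invalidating: a disagreeing AI breaks an otherwise valid consensus.
inval_case = aggregate_with_ai({"A": 2.5, "B": 0.5}, "B", 0.9, "invalidating")
# Confident: the AI is ignored when its score is below the threshold.
shy_case = aggregate_with_ai({"A": 1.5}, "A", 0.5, "confident")
sure_case = aggregate_with_ai({"A": 1.5}, "A", 0.9, "confident")
```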

Aggregating labels: a new open source tool

peerannot: Python library to handle crowdsourced data

Conclusion

- Challenges in citizen science: many and varied (need more attention)

- Crowdsourcing / Label uncertainty: helpful for data curation

- Improved data quality \(\implies\) improved learning performance

Dataset release:

- Pl@ntNet-300K: https://zenodo.org/record/5645731

- Pl@ntNet SWE flora: https://zenodo.org/records/10782465

Code release:

- Toolbox: https://peerannot.github.io/

- Some benchmarks: https://benchopt.github.io/

Future work

- Uncertainty quantification

- Improve robustness to adversarial users

- Leverage gamification to collect more high-quality labels: theplantgame.com