
Joseph Salmon

IMAG, Univ Montpellier, CNRS, Inria, Montpellier, France

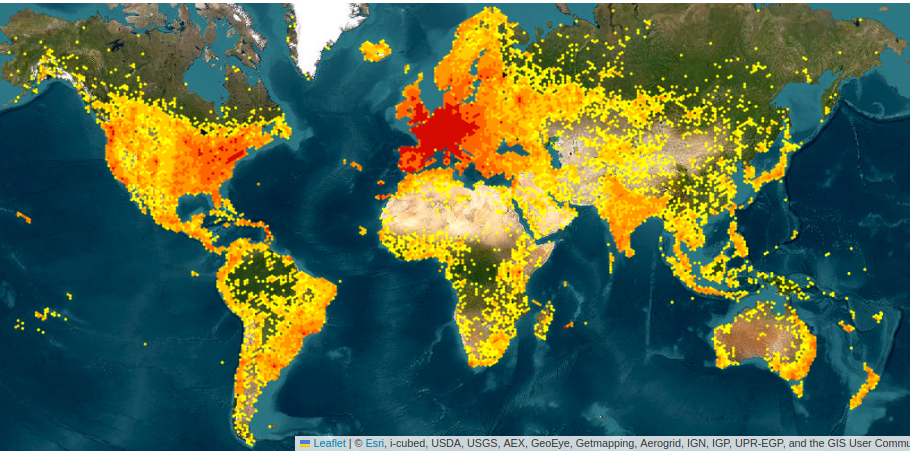

Machine learning & citizen science

A citizen science platform that uses machine learning to help people identify plants with their phone

- Place of birth: Montpellier

- Date of birth: 2011

- Website: https://plantnet.org/

- Note: no mushrooms, sorry!

- 25 million users

- 200+ countries

- Up to 2 million images uploaded per day

- 50,000 plant species (out of 300,000)

- 1.2 billion images

- 20 million labeled / validated

- 20 researchers / engineers (in Montpellier)

How did we start?

Conversation with Alex (Gramfort) while:

- working on speeding up Lasso solvers (for applications in neuroimaging)

- both being grumpy about the review process (as reviewers)

We talked about launching a benchmarking platform for optimization algorithms, but got too busy for a while.

And then Thomas (Moreau) arrived, soon backed up by Mathurin (Massias).

My contributions

- Creating the first benchmarks and solvers (around \(\ell_1\) solvers, etc.)

- Helping write the NeurIPS paper

- Logo design (Inkscape / SVG)

- Organizing sprints (two in Montpellier, then they did a bigger one in Paris)

- Funding people to work on it (especially Mission Complémentaire de Code, Tanguy Le Fort, Amélie Vernay)

- Talking about it, e.g., an answer to Donoho’s paper “Data Science at the Singularity” in Harvard Data Science Review

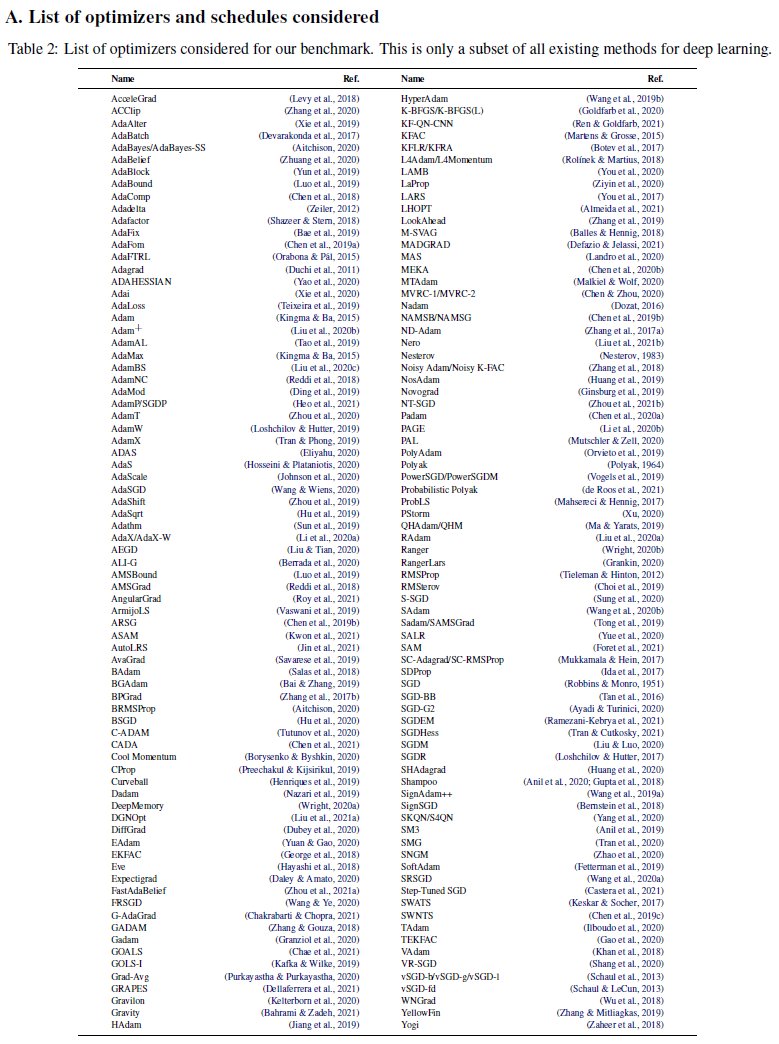

Benchmarking Optimization Algorithms

The Problem

Benchmarking Algorithms in Practice

Choosing the best algorithm to solve an optimization problem often depends on:

- The data: scale, conditioning

- The objective: parameters, regularization

- The implementation: complexity, language

An impartial selection requires a time-consuming benchmark!

The goal of benchopt is to make this step as easy as possible.

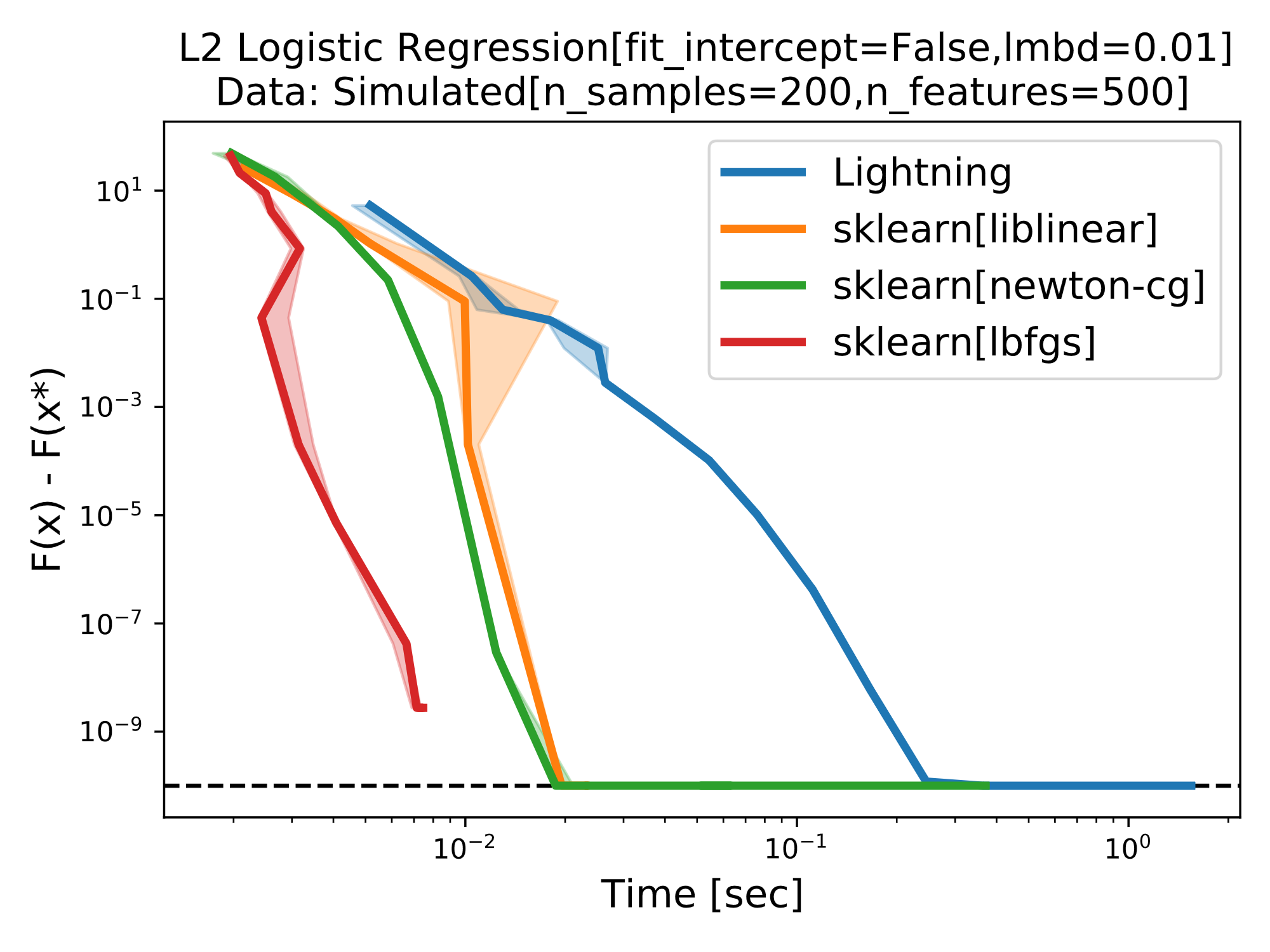

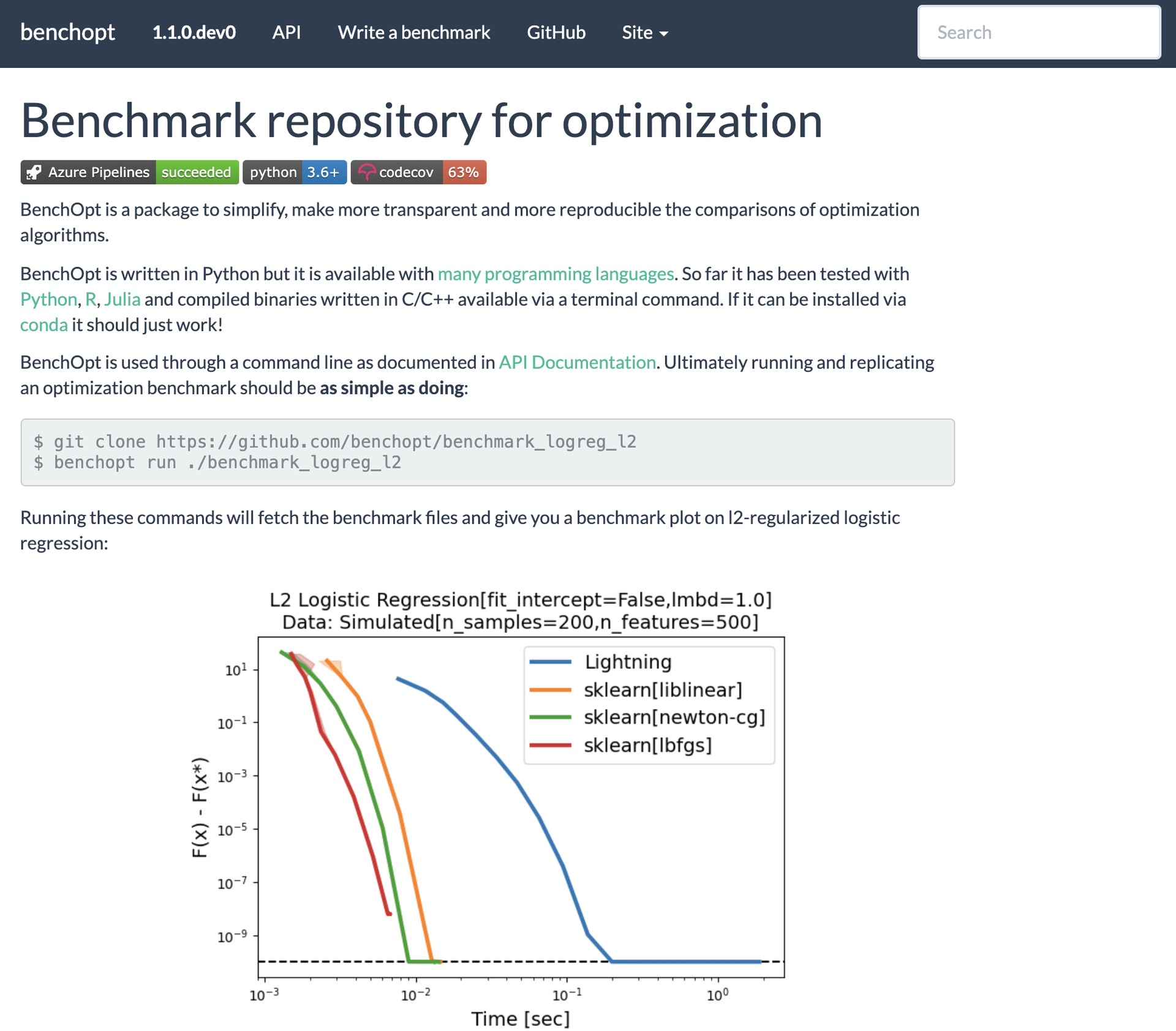

Running a benchmark for the \(\ell_2\)-regularized logistic regression with multiple solvers and datasets is now as easy as calling:

benchopt: Language Comparison

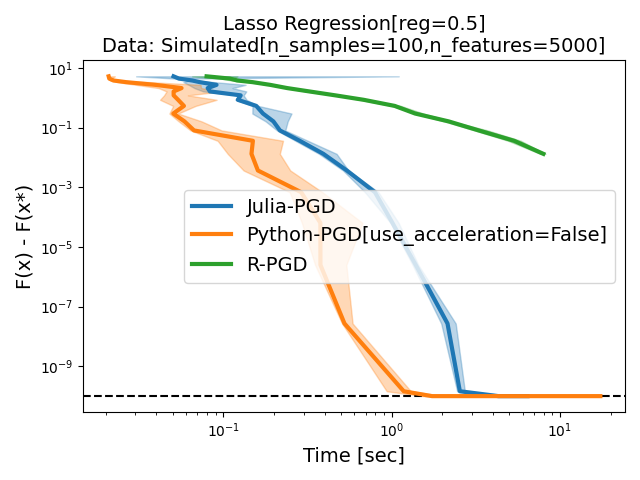

benchopt can also compare the same algorithm in different languages.

Here is an example comparing PGD in Python, R, and Julia.

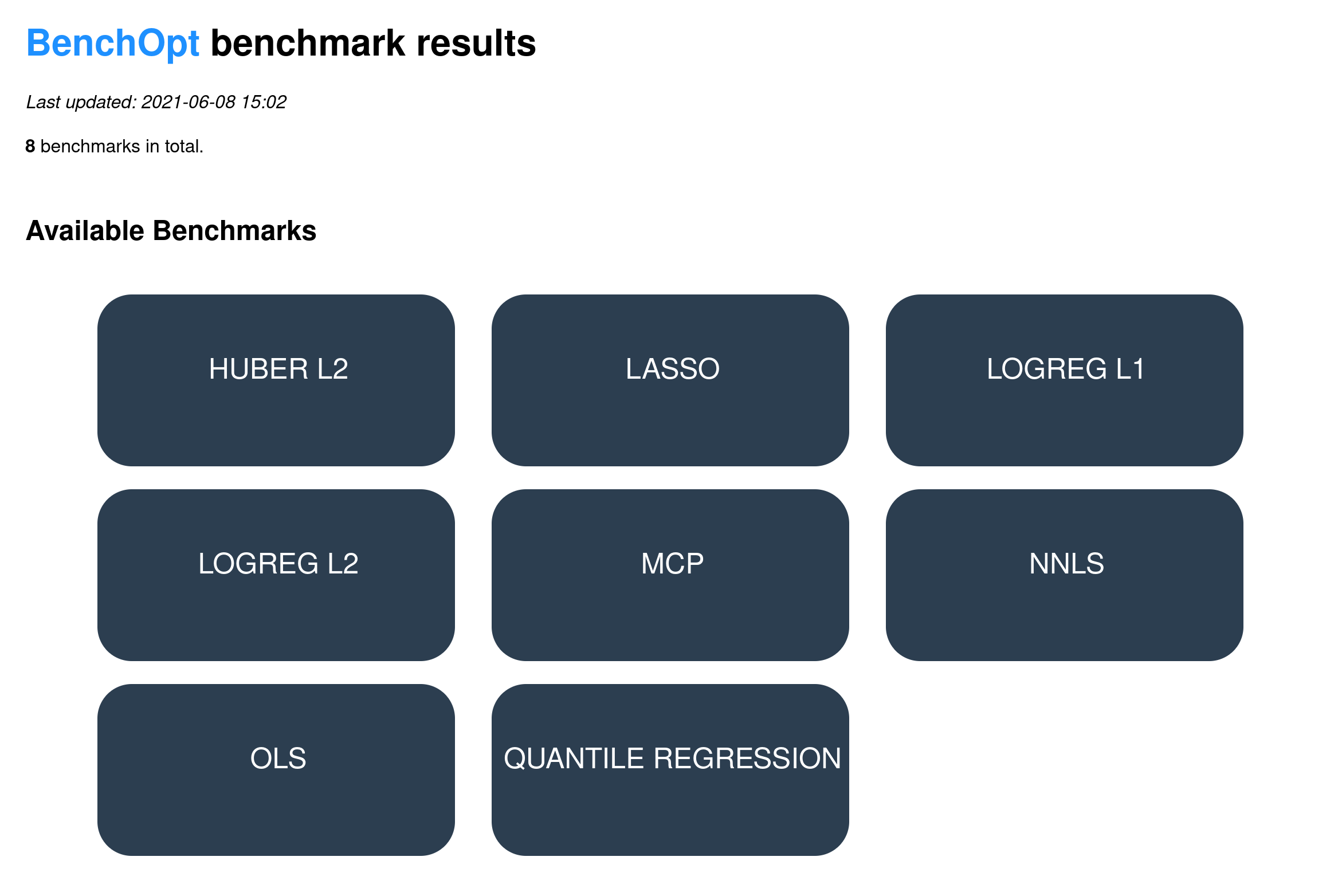

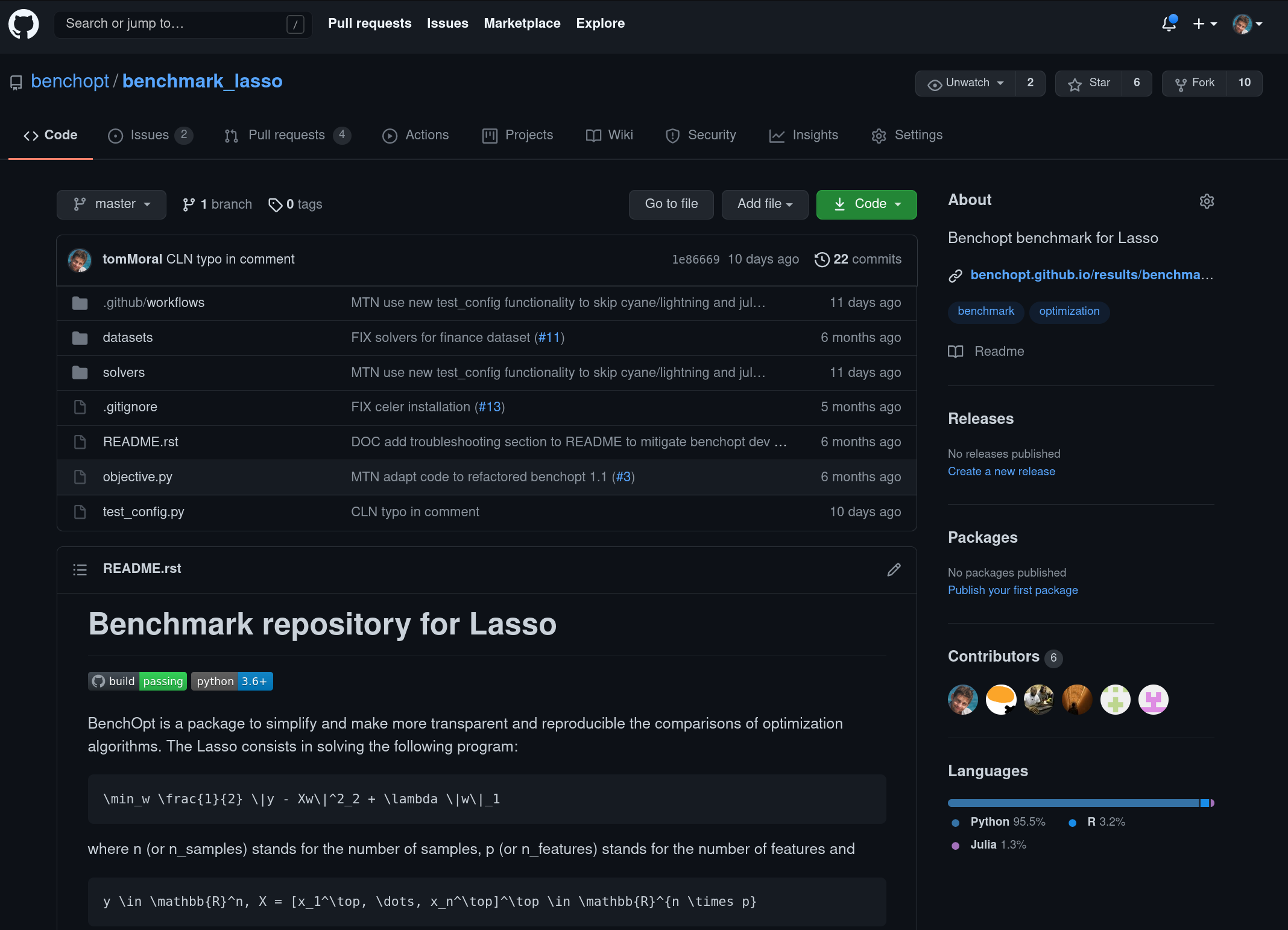
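For readers who want to see what is being compared, here is a minimal pure-Python sketch. It assumes that PGD stands for proximal gradient descent and that the objective is the Lasso; neither is stated on the slide. The function names, the tiny dataset, and the step size are illustrative choices, not code from any benchopt benchmark.

```python
def soft_threshold(v, t):
    """Proximal operator of t * |.| applied to a scalar."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def lasso_objective(X, y, beta, reg):
    """Evaluate 0.5 * ||y - X beta||^2 + reg * ||beta||_1."""
    residual = [yi - sum(xij * bj for xij, bj in zip(row, beta))
                for row, yi in zip(X, y)]
    return 0.5 * sum(r * r for r in residual) + reg * sum(abs(b) for b in beta)


def pgd_lasso(X, y, reg, step, n_iter):
    """Run n_iter proximal gradient steps starting from beta = 0."""
    n_features = len(X[0])
    beta = [0.0] * n_features
    for _ in range(n_iter):
        # Residual X beta - y at the current iterate
        residual = [sum(xij * bj for xij, bj in zip(row, beta)) - yi
                    for row, yi in zip(X, y)]
        # Gradient of the smooth part: X^T (X beta - y)
        grad = [sum(row[j] * r for row, r in zip(X, residual))
                for j in range(n_features)]
        # Gradient step followed by soft-thresholding
        beta = [soft_threshold(b - step * g, step * reg)
                for b, g in zip(beta, grad)]
    return beta


# Toy data (assumption): 3 samples, 2 features.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 0.0, 1.0]
# step = 0.3 is below 1 / L, where L = 3 is the largest eigenvalue of X^T X.
beta = pgd_lasso(X, y, reg=0.1, step=0.3, n_iter=200)
final_objective = lasso_objective(X, y, beta, reg=0.1)
print(beta, final_objective)
```

The same few lines port almost directly to R or Julia, which is what makes a cross-language timing comparison of this kind meaningful.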

benchopt: Publishing Results

benchopt also allows easy publishing of benchmark results:

Benchmark Example

Benchmark: Principle

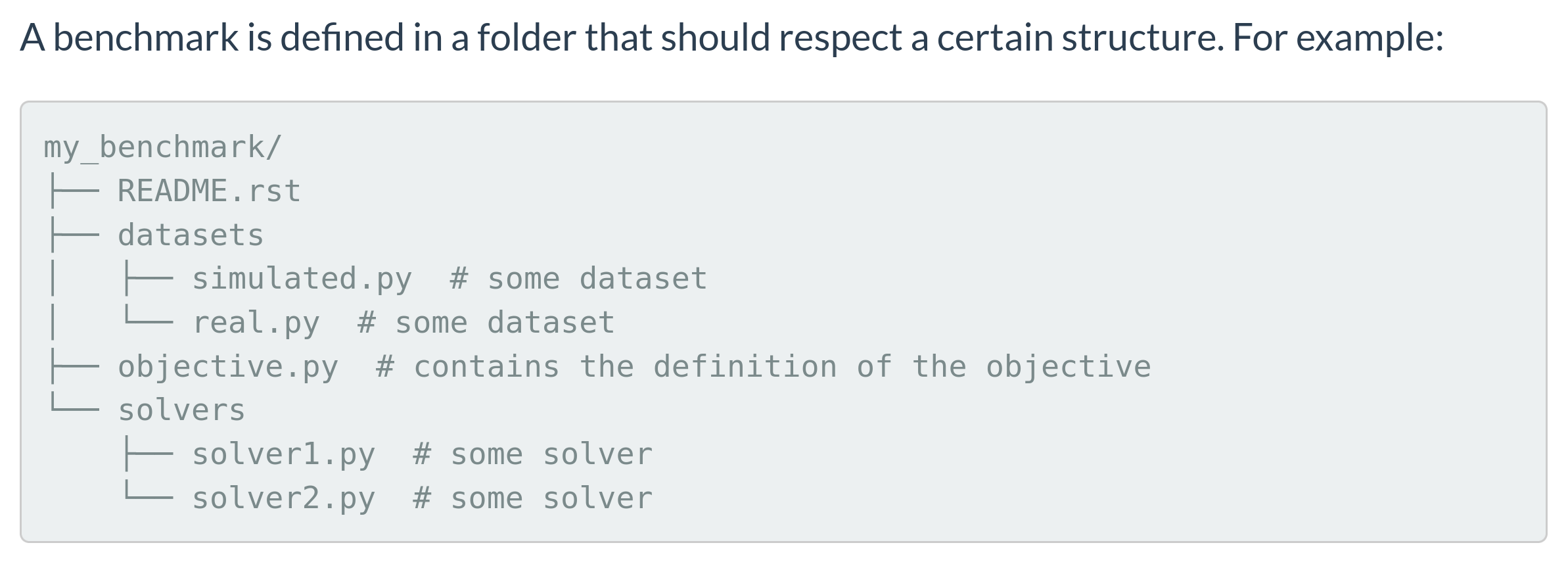

A benchmark is a directory with:

- An `objective.py` file with an `Objective`

- A directory `solvers` with one file per `Solver`

- A directory `datasets` with `Dataset` generators/fetchers

The benchopt client runs a cross product and generates a CSV file + convergence plots like above.
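The cross product mentioned above can be illustrated with the standard library alone. This is a sketch of the idea, not benchopt's implementation: the dataset and solver names, the `run_one` placeholder, and the column names are all hypothetical.

```python
import csv
import io
import itertools

# Hypothetical names, for illustration only.
datasets = ["dataset_a", "dataset_b"]
solvers = ["solver_a", "solver_b"]
regs = [0.1, 1.0]


def run_one(dataset, solver, reg):
    """Placeholder for one benchmark run; returns a dummy value, not a real result."""
    return float(len(dataset) + len(solver)) * reg


# One row per (dataset, solver, reg) combination.
rows = [
    {"dataset": d, "solver": s, "reg": r, "objective": run_one(d, s, r)}
    for d, s, r in itertools.product(datasets, solvers, regs)
]

# Write the rows as CSV into an in-memory buffer.
buffer = io.StringIO()
writer = csv.DictWriter(
    buffer, fieldnames=["dataset", "solver", "reg", "objective"]
)
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

With 2 datasets, 2 solvers, and 2 regularization values, the grid has 8 rows; adding one solver file to the benchmark adds a full slice of the grid without touching the rest.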

Benchmark: Objective & Dataset

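    from benchopt import BaseObjective


    class Objective(BaseObjective):
        name = "Benchmark Name"

        def set_data(self, X, y):
            # Store the data provided by the dataset
            self.X, self.y = X, y

        def compute(self, beta):
            # Return one or several metrics evaluated at beta
            return dict(obj1=..., obj2=...)

        def to_dict(self):
            # Information passed on to the solvers
            return dict(X=self.X, y=self.y, reg=...)

Benchmark: Solver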

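    from benchopt import BaseSolver


    class Solver(BaseSolver):
        name = "Solver Name"

        def set_objective(self, X, y, reg):
            # Store objective info
            self.X, self.y, self.reg = X, y, reg

        def run(self, n_iter):
            # Run computations for n_iter iterations and keep the result
            self.beta = ...

        def get_result(self):
            return self.beta

Flexible API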

`get_data` and `set_objective` allow compatibility between packages. `n_iter` can be replaced with a tolerance or a callback.
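To make the hand-off between the three pieces concrete, here is a toy harness in plain Python that mimics the method names from the slides (`get_data`, `set_data`, `to_dict`, `set_objective`, `run`, `get_result`, `compute`). It does not import benchopt and is not how benchopt is implemented; the one-feature ridge problem, the `run_benchmark` helper, and the step size are assumptions made for illustration.

```python
class Dataset:
    def get_data(self):
        # Tiny hard-coded regression problem with a single feature
        return dict(X=[1.0, 2.0, 3.0], y=[2.0, 4.0, 6.0])


class Objective:
    reg = 1.0  # illustrative regularization strength

    def set_data(self, X, y):
        self.X, self.y = X, y

    def compute(self, beta):
        # Ridge objective: 0.5 * sum (y - x * beta)^2 + 0.5 * reg * beta^2
        loss = 0.5 * sum((yi - xi * beta) ** 2 for xi, yi in zip(self.X, self.y))
        return dict(value=loss + 0.5 * self.reg * beta ** 2)

    def to_dict(self):
        return dict(X=self.X, y=self.y, reg=self.reg)


class Solver:
    def set_objective(self, X, y, reg):
        self.X, self.y, self.reg = X, y, reg

    def run(self, n_iter):
        # Plain gradient descent from beta = 0 with step 0.5 / L
        lipschitz = sum(xi * xi for xi in self.X) + self.reg
        beta = 0.0
        for _ in range(n_iter):
            grad = sum(xi * (xi * beta - yi) for xi, yi in zip(self.X, self.y))
            beta -= 0.5 * (grad + self.reg * beta) / lipschitz
        self.beta = beta

    def get_result(self):
        return self.beta


def run_benchmark(dataset, objective, solver, budgets):
    """Wire the pieces together and record one objective value per budget."""
    objective.set_data(**dataset.get_data())
    solver.set_objective(**objective.to_dict())
    curve = []
    for n_iter in budgets:
        solver.run(n_iter)  # each budget restarts from scratch
        value = objective.compute(solver.get_result())["value"]
        curve.append((n_iter, value))
    return curve


curve = run_benchmark(Dataset(), Objective(), Solver(), budgets=[0, 1, 5])
print(curve)
```

Because the keys returned by `get_data` match the arguments of `set_data`, and the keys of `to_dict` match those of `set_objective`, any dataset or solver that respects these names can be swapped in without changing the harness.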

benchopt

benchopt: Making Tedious Tasks Easy

Automating tasks:

- Automatic installation of competitors’ solvers.

- Parametrized datasets, objectives, and solvers, run on their cross product.

- Quantify the variance.

- Automatic caching.

- First visualization of the results.

- Automatic parallelization, … ?
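As an illustration of what quantifying the variance can mean, the sketch below times the same computation several times and reports the median and quartiles. It is a generic standard-library example under assumed names (`time_repetitions`, `summarize`), not the way benchopt measures or aggregates runs.

```python
import statistics
import time


def time_repetitions(func, n_repetitions):
    """Call func n_repetitions times and return the wall-clock durations."""
    durations = []
    for _ in range(n_repetitions):
        start = time.perf_counter()
        func()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Return the median and quartiles of a list of durations."""
    q1, median, q3 = statistics.quantiles(durations, n=4)
    return dict(q1=q1, median=median, q3=q3)


def workload():
    # Stand-in for a solver run
    return sum(i * i for i in range(10_000))


durations = time_repetitions(workload, n_repetitions=10)
summary = summarize(durations)
print(summary)
```

Reporting quartiles rather than a single timing makes it visible when two solvers differ by less than the run-to-run noise.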

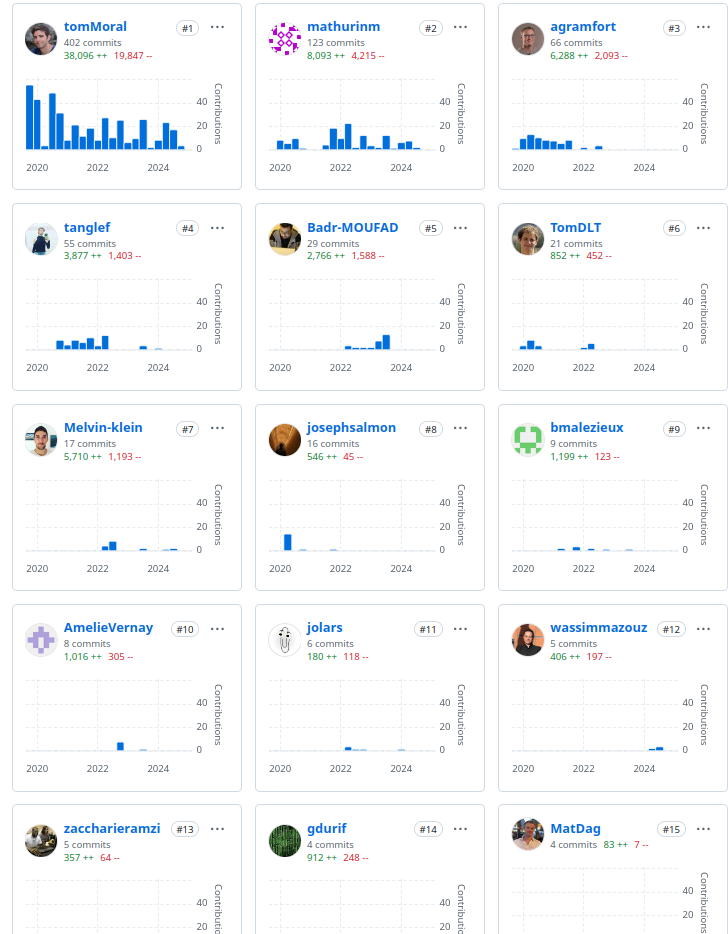

Bus Factor

One and a half:

Mostly Thomas; Mathurin could revive it if needed.

Credits (core package only; this does not reflect all contributions)

Credits with pictures

And more people, but those were the only ones with pictures at hand!